I wrote this in December 2024 but it sat as a draft until I rediscovered it in early 2026. I’ve backdated it as a record of where my head was at the time.

I’ve always considered myself a great refiner of other people’s ideas. Tell me your idea and I can instantly tell you what works and what doesn’t and give you lots of suggestions for refinement.

But give me a blank sheet of paper and a general topic and I’ll struggle to get traction. Even when I do, I feel the burden. It’s not fun.

AIs are fun.

Instead of struggling alone, I have an infinitely patient intellectual partner for generating and refining ideas. And that’s just scratching the surface of what they can do now and what they will do in the future.

Why and how I use AI

Working in tech, I’ve been low-level aware of AI and AI-adjacent research for a long time. In the ’80s and ’90s, I remember hype around “expert” systems. Then I remember a shift towards statistical modeling and machine learning (like spam filters and the Netflix Prize). Then I remember the game AIs: IBM’s Deep Blue for chess and AlphaGo for Go. In 2019, GPT-2 came along, hinting at what was to come. Then in 2020, the rudimentary reasoning capabilities of GPT-3 made it clear that something profoundly different was about to happen.

My interest in AI grew slowly, then suddenly.

By 2022, capabilities developed to the point where I was curious to participate, not just spectate. Looking back at my notes, I think I started with image generation – DALL-E and later Midjourney, initially as props for a D&D game I ran for my daughter. Then the arrival of ChatGPT 3.5 got me started actually using an LLM rather than just reading about the crazy things people were doing with them.

The more I used them, the more I was convinced that AIs – even short of AGI, much less ASI – were going to be transformative on a scale most people weren’t imagining.

In the article AI and the Technological Richter Scale, Zvi Mowshowitz described a Technological Richter Scale introduced in Nate Silver’s book On the Edge. A Level 7 is one of the leading inventions of the decade and has an impact on everyday lives, like “credit cards” or “social media”. A Level 8 is seismic, a technology of the century and broadly disruptive, like “automobiles”, “electricity” or “the Internet”. Zvi thinks AI is easily an 8 and likely much higher.

AI is on track to be a bigger deal than electricity.

Bigger than cars. Bigger than the Internet. And I agree that’s plausibly the low end of the impact it could have on humanity. Given that framing – AI as one of the most transformative technologies of my lifetime, on par with the Internet itself – my interest in AI is threefold:

- There is already incredible “mundane utility” (as Zvi puts it) to be had with today’s AIs. As an engineer, doing something better or faster with tools is extraordinarily satisfying.

- If there is something incredibly disruptive on the horizon, the more I know about it, the more I can anticipate and prepare. Even if we avoid absolute disaster (see p(doom)), the economic and societal changes will be at least on par with the industrial revolution and will happen over a much shorter timeframe.

- I’m a parent of teens. If social media weren’t already bad enough, now they’re in a world being transformed by AI: ChatGPT cheating is rampant in schools; the future value and reward of education and intelligence itself is in question; whole job categories may vanish; and we face unknown political, economic, and social disruption. They need life advice that takes into account where things are going, not the rapidly irrelevant past.

Schools aren’t going to teach my kids what they need to know about AI.

Apropos of these changes, I now use an AI service for some mundane utility at least once a day, most days. As I write this, I pay for commercial access to three consumer services: ChatGPT, Claude.ai, and Midjourney. Claude is probably 90% of my AI activity. I’ve just started dabbling in API integrations and command-line access through the llm tool. I have yet to start with AI tools directly in my code editor, but that’s on the horizon.

Broadly, I currently use AI mostly for four things:

- Wisdom of the crowd: When I’m looking for answers to questions, I now start with an LLM rather than a Google search. There is a risk of hallucinations, but this has gotten better, and is not really any different than dealing with misinformation (intentional or not) when checking results from a Google search. I tend not to use them to look for specific facts, but for synthesis questions like “why” or “how”. Often they come up with obscure things that I wouldn’t have known how to find initially, which I can then double-check with a search.

- Textual analysis and transformation: One of my favorite tools is my “gister” that I use to summarize articles or PDFs to sift for novel ideas before deciding to commit to reading something in full. I also use ChatGPT to generate French Anki cards for my daughter much more efficiently than typing it all in by hand. I’ve also asked it to analyze some of my writing and suggest how to simplify it or amend the tone.

- Software engineering support: Software is a huge field, with dozens of languages, frameworks, and technologies – all changing constantly. LLMs have been great at teaching me new things quickly, explaining things I haven’t studied before, and letting me ask all the “dumb questions” I have when figuring things out. I find them better at debugging odd situations than plugging error messages into Google and sifting the results (cf. Wisdom of the crowd).

- Idea generation and refinement: As I mentioned in the opening, when I’m stuck doing something creative, I can prompt for a variety of ideas and then mix/match/refine until I have something that clicks for me. This is also where generative art is great: when I have ideas for images beyond my meager artistic skill, I can iterate with an AI. This feels a lot like coding, where I express my ideas at a high level and the compiler turns them into executable machine code, except the ideas I express are “compiled” into an image. I iterate my image prompts not unlike debugging a subroutine until the outcome looks the way I want.

I learn a lot from seeing what others do.

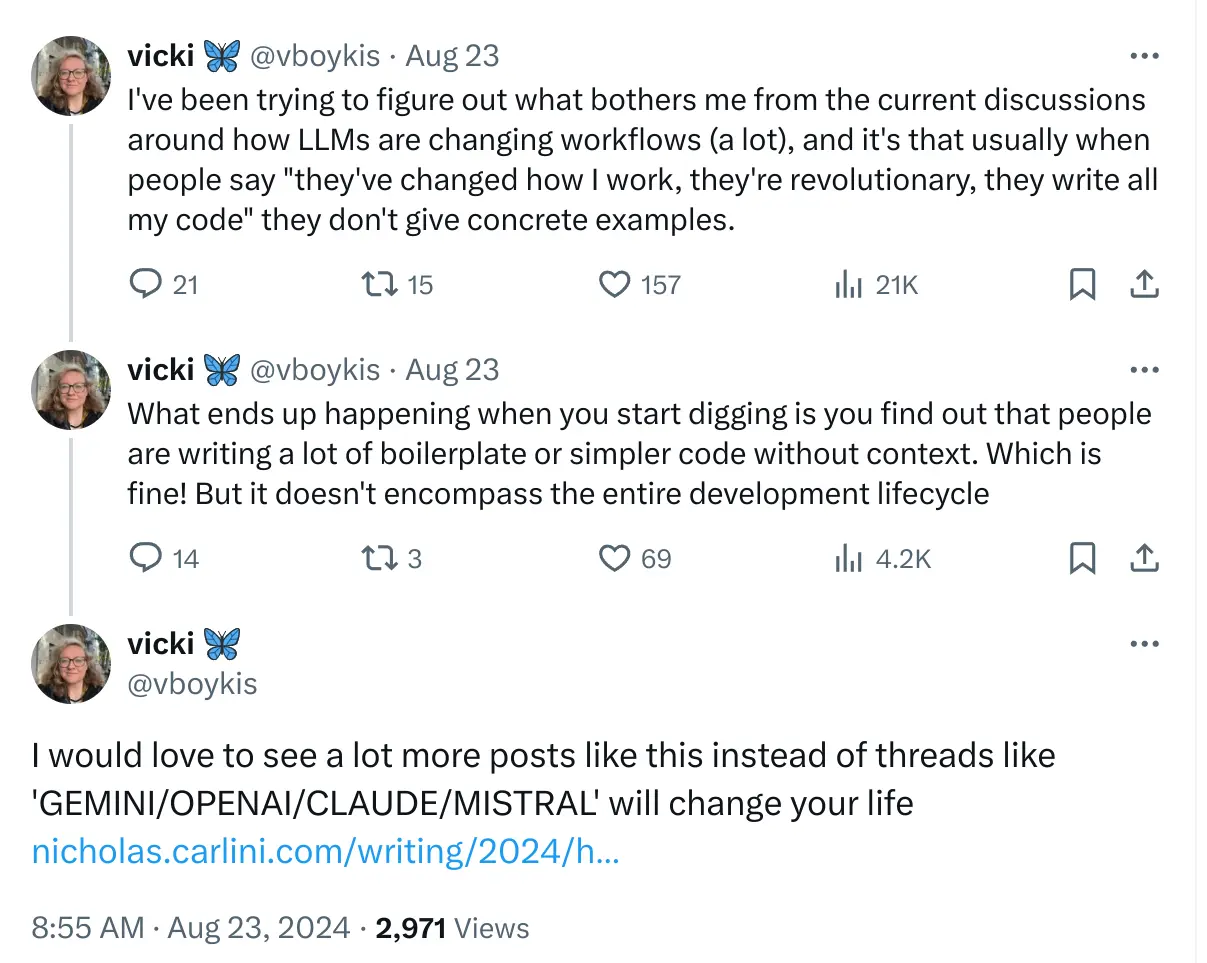

This tweet by Vicki Boykis in 2023 echoed my own feelings trying to sort out hype and trivial examples from real-world uses for LLMs.

Vicki cited How I Use “AI” by Nicholas Carlini. That tweet got me started sharing my own experiments on a private Discord with some tech friends, and they shared back their own experiences.

Since then, I’ve found Simon Willison’s weblog to be another great source for practical examples. I mentioned Zvi earlier, who covers AI and other topics at Don’t Worry About the Vase; it has many examples of mundane utility – some with actual details.

Reading these regularly reminded me of great advice I got long ago from swyx to learn in public. My plan is to start posting my own examples here on xdg.me. Many will be short examples in the Tips section, but I might have the occasional longer piece in Writing from time to time.

If you have a place where you publish your own mundane utilities, please drop me a line. I’d love to take a look!